Artificial Intelligence (AI) and Machine Learning (ML) have recently driven exciting, data-driven advances in a wide range of application areas. Typical problem settings include predictive modelling (e.g., classification and regression) and generative modelling. Faculty in the department take a principled, rigorous approach to developing new methodologies in these areas, with applications in various scientific and engineering disciplines and beyond.

Recent Highlights

A Theoretical Characterization of Neural Network Ensembles

G. Pleiss

Ensembles of large neural networks are increasingly common, yet recent studies have questioned their effectiveness. In a recent paper, Professor Pleiss and collaborators N. Dern and J. Cunningham offer a new theoretical analysis of random feature (RF) regressors that helps explain these findings. They show that as RF models gain more parameters than training data points, entering the so-called "overparameterized" regime, an ensemble of these models becomes functionally equivalent to a single, larger RF model. These results challenge common assumptions about the advantages of ensembles in overparameterized settings, with troubling implications for uncertainty quantification and robustness of deep neural networks.

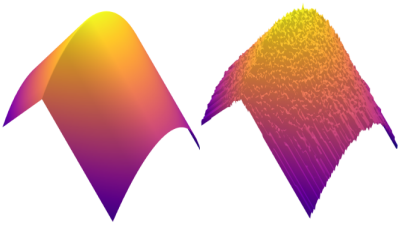

MixFlows: Principled Variational Flows

T. Campbell

Variational flows are a popular methodology in machine learning for both generative modelling and probabilistic inference. In recent work, Professor Campbell and student authors Z. Xu and N. Chen developed the first variational flows that come with rigorous, practical statistical guarantees on approximation quality. In follow-up work, the team showed that, despite the chaotic behaviour of these flows, the shadowing property can be used to obtain valid statistical results.